
A small robot, made of little more than two wheels and four cables, moves across a table. As it passes the people sitting at the table, the robot stops in front of someone who is smiling and says, in a metallic voice, “You seem happy: tell me why you’re so happy!” If, however, a person is down in the dumps, it asks “You seem sad: would you like a hug?”

How can such a basic gadget recognise human emotion? Where is it hiding the powerful processors and enormous databases required to do this?

Cloud-powered

In actual fact, this enormous computing power is not found inside the machine; it resides in Google’s cloud, the collection of remote computers that the company provides for its users and developers.

The empathetic robot uses the power of the cloud to recognise facial expressions, thanks to one of the tech giant’s most innovative applications: Google Cloud Vision.
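The article does not say how the robot itself is programmed. Here is a minimal sketch of its decision step. It assumes the robot receives the Vision API’s JSON face-detection response, in which each face carries likelihood fields such as `joyLikelihood` and `sorrowLikelihood`, each set to one of six levels from `VERY_UNLIKELY` to `VERY_LIKELY`. The `LIKELY` threshold and the function names are hypothetical choices for illustration.

```python
from typing import Optional

# Likelihood levels used in Vision API face annotations, least to most confident.
LIKELIHOOD_ORDER = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def at_least(value: str, threshold: str = "LIKELY") -> bool:
    """Return True if a likelihood level is at or above the (hypothetical) threshold."""
    return LIKELIHOOD_ORDER.index(value) >= LIKELIHOOD_ORDER.index(threshold)


def choose_phrase(face: dict) -> Optional[str]:
    """Pick what the robot should say for one face annotation, or None to stay quiet."""
    if at_least(face.get("joyLikelihood", "UNKNOWN")):
        return "You seem happy: tell me why you're so happy!"
    if at_least(face.get("sorrowLikelihood", "UNKNOWN")):
        return "You seem sad: would you like a hug?"
    return None  # no strong emotion detected


# Hypothetical fragment of a face-detection response for one smiling person.
response = {"faceAnnotations": [{"joyLikelihood": "VERY_LIKELY", "sorrowLikelihood": "VERY_UNLIKELY"}]}
for face in response.get("faceAnnotations", []):
    print(choose_phrase(face))  # You seem happy: tell me why you're so happy!
```

Because the cloud returns graded likelihoods rather than a single yes/no answer, the robot needs a cut-off. A stricter threshold such as `VERY_LIKELY` would make it speak less often but with more confidence.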

This product allows developers and hackers to harness the power of the algorithms that underpin Google Images. The abilities of Google’s eye are wide-ranging: from guessing the correct breed of dog to counting how many people there are in a class photo; from telling a pumpkin and a football apart to pinpointing the location of a lake in an old photo.

Moving images

Google Cloud Vision is only a year old, yet on 9th March Google unveiled a further development: Google Cloud Video Intelligence. This system applies some of Vision’s functions to videos, which greatly expands the options for searching audiovisual content.

It is no coincidence that one of the first companies interested in Google Cloud Vision was AeroSense. This drone company records thousands of images on every flight, and reviewing them all by hand to identify the objects photographed would be impossible. It is in situations like these that Google’s automated vision can prove indispensable.
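The article does not describe AeroSense’s pipeline. The sketch below only shows how thousands of flight photos might be grouped into batched label-detection requests for the Vision API’s `images:annotate` endpoint instead of being reviewed one by one. The batch size of 16 is an assumption about the per-request image limit and should be checked against current quotas. The bucket name, file names and helper functions are hypothetical.

```python
from typing import Iterator, List


def chunk(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_batches(image_uris: List[str], batch_size: int = 16) -> List[dict]:
    """Build one request body per batch, asking for labels on every image in it."""
    batches = []
    for group in chunk(image_uris, batch_size):
        batches.append({
            "requests": [
                {
                    "image": {"source": {"imageUri": uri}},
                    "features": [{"type": "LABEL_DETECTION", "maxResults": 5}],
                }
                for uri in group
            ]
        })
    return batches


# Hypothetical flight of 40 photos stored in a Cloud Storage bucket.
photos = [f"gs://example-flight-bucket/frame_{i:04d}.jpg" for i in range(40)]
batches = build_batches(photos)
print(len(batches), [len(b["requests"]) for b in batches])  # 3 [16, 16, 8]
```

Each batch would then be sent as a separate authenticated POST request. Sending and authentication are deliberately left out here.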

Algorithms that learn from data

Google Cloud Vision’s secret lies in the machine learning algorithms on which the search engine built its reputation. Google uses the enormous quantity of data at its disposal to train these algorithms.

When we search for the word ‘dog’, Google Images brings up millions of images of dogs. This is not because the algorithm understands the concept of a dog per se, but because it has learnt to recognise the animal by comparing an enormous number of images.

The company has provided users with a simulator which allows people to explore its galaxy of images and get to grips with how Google Cloud Vision works. And the Mountain View company is not the only firm to jump on this bandwagon. Amazon, for example, has launched a rival product: Amazon Rekognition.

Text and images

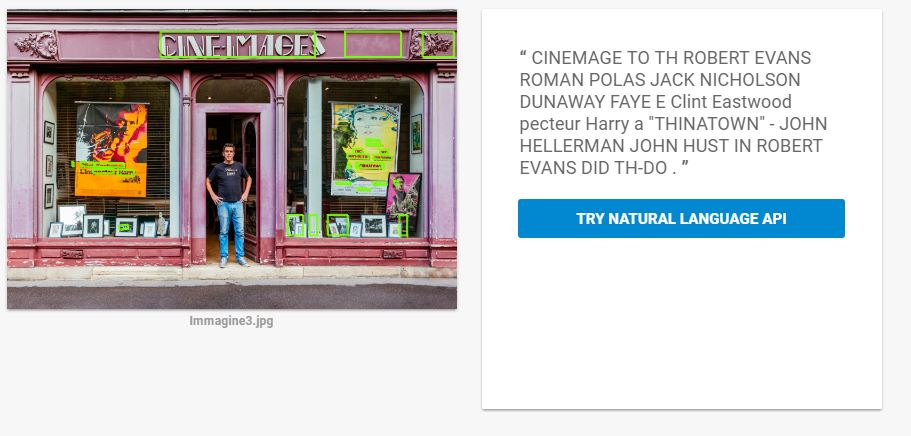

Computerised vision has some genuinely surprising applications. In the following video, a robot built around a Raspberry Pi uses Google Cloud Vision to classify Halloween treats as delicious or horrible. The software recognises the brand of each snack with an algorithm that extracts text from images (optical character recognition).
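The video does not show the robot’s code. A minimal sketch of the brand step might look like the following. It assumes the robot receives the Vision API’s JSON text-detection response, where the first `textAnnotations` entry holds the full extracted text. It then looks the text up in a brand list. The brand names, verdicts and function names are all hypothetical.

```python
from typing import Optional

# Hypothetical brand names and verdicts; the real robot's list is not described.
TREAT_VERDICTS = {"CHOCOBLAST": "delicious", "SOURGUM": "horrible"}


def full_text(response: dict) -> str:
    """Return the full extracted text (first textAnnotations entry), or '' if none."""
    annotations = response.get("textAnnotations", [])
    return annotations[0]["description"] if annotations else ""


def classify_treat(response: dict) -> Optional[str]:
    """Match the extracted text against known brands, ignoring case."""
    text = full_text(response).upper()
    for brand, verdict in TREAT_VERDICTS.items():
        if brand in text:
            return verdict
    return None  # unknown brand or no text found


# Hypothetical text-detection response for a wrapper photo.
sample = {"textAnnotations": [{"description": "ChocoBlast\nMilk chocolate 45 g"}]}
print(classify_treat(sample))  # delicious
```

Upper-casing the text before matching keeps the lookup robust to the mixed capitalisation that packaging often uses.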

Google Cloud Vision can interpret images in many different ways:

- Object identification: the software recognises flowers, animals, vehicles and thousands of other categories that occur frequently in images.

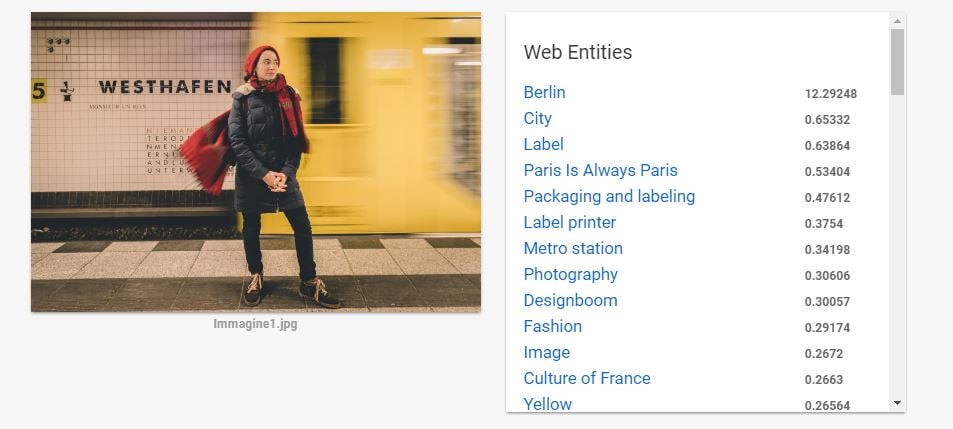

- Location, person and brand identification: the program taps into its database to identify famous places, both natural and man-made, such as mountains or buildings. The same mechanism can also be used to identify celebrities or brands.

- Inappropriate content: Google Cloud Vision can detect adult or violent content, which helps to moderate visual content on a large scale.

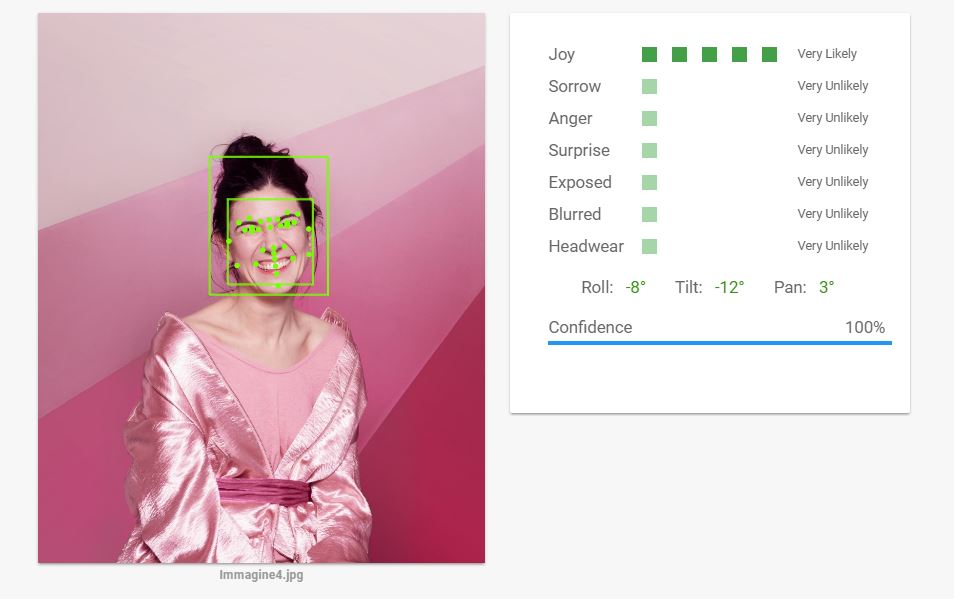

- Face detection: this is the most spectacular application of the technology. Google Cloud Vision can detect one or more human faces within a photo and estimate how likely each face is to express emotions such as joy, sorrow, anger or surprise. The system knows whether a photo contains a face, but it is not built to systematically identify whose face it is.
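Each capability in the list corresponds to a feature type that a developer names in a request to the Vision API’s `images:annotate` REST endpoint (`https://vision.googleapis.com/v1/images:annotate`). The minimal sketch below only builds the JSON body, with the image embedded as base64. Sending it requires credentials and is omitted. The short keys such as `"objects"` and the helper name are hypothetical. Celebrity recognition is left out because it is not one of the standard feature types.

```python
import base64
import json
from typing import List

# Vision API feature types for the capabilities described above.
FEATURES = {
    "objects": "LABEL_DETECTION",
    "places": "LANDMARK_DETECTION",
    "brands": "LOGO_DETECTION",
    "moderation": "SAFE_SEARCH_DETECTION",
    "faces": "FACE_DETECTION",
}


def build_request(image_bytes: bytes, wanted: List[str]) -> dict:
    """Build an images:annotate request body for one image and several features."""
    return {
        "requests": [{
            "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
            "features": [{"type": FEATURES[name]} for name in wanted],
        }]
    }


# The first bytes of a JPEG file stand in for a real photo here.
body = build_request(b"\xff\xd8\xff", ["objects", "faces"])
print(json.dumps(body, indent=2))
```

A single request can ask for several analyses at once, so one photo can be labelled, checked for inappropriate content and scanned for faces in the same round trip.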

For hackers and researchers

Google Cloud Vision opens up surprising possibilities for hackers. For example, you can pair it with Google Translate to learn how to say ‘glass’ in Chinese just by taking a photo of the object with your mobile.

Combining the software with the knowledge of fashion experts produced a system that automatically identifies the subculture of passers-by with an eccentric appearance.

Disney used the program in an augmented reality app that makes the dragon from its latest film appear on your sofa. The researcher Kalev Leetaru, meanwhile, used it to analyse the most common situations in dozens of election campaign videos and the locations photographed most frequently by the international press.

Google’s eye promises to change the way that everyone sees the world. Will the next application be built by you?